Training Large Deep Neural Networks

- Agilytics

- Nov 29, 2018

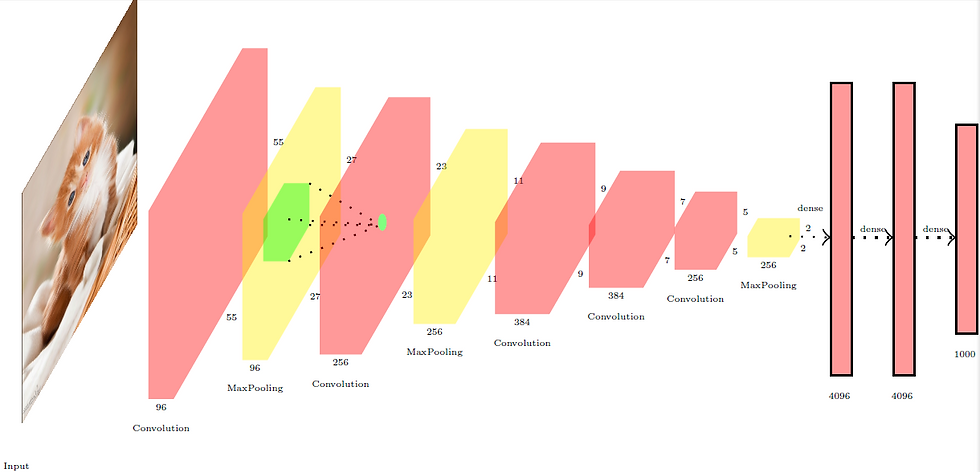

There have been great advances in training large, deep neural networks (DNNs), including remarkable successes in training convolutional neural networks (convnets) to recognize natural images.

Neural networks have long been known as “black boxes”: because a trained network has a large number of interacting, non-linear parts, it is difficult to understand exactly how any particular one functions.

Agilytics recommends taking advantage of off-the-shelf software packages, such as Theano, Pylearn2, Caffe, and PyTorch, in new domains.

Consider some neurons in a given layer of a CNN (convnet). We can feed images into this CNN and identify the images that cause these neurons to fire. We can then trace back to the patch in each image that causes them to fire.
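The first step, finding the images that make a neuron fire, can be sketched as below. This is a minimal sketch, assuming the layer's outputs for a batch of images have already been recorded (for example with a framework's hooks) into a NumPy array of shape (images, channels, height, width). Here a "neuron" is taken to be one feature-map channel, and its response to an image is that channel's maximum value; the function name `top_activating_images` is hypothetical.

```python
import numpy as np


def top_activating_images(activations: np.ndarray, channel: int, k: int = 5):
    """Rank images by how strongly one neuron (feature-map channel) fires.

    activations: (num_images, num_channels, height, width) layer outputs.
    Returns (image_index, peak_score, (row, col)) for the top-k images, where
    (row, col) is the feature-map location of the peak response.
    """
    fmap = activations[:, channel]          # (N, H, W) maps for this neuron
    n, _h, w = fmap.shape
    flat = fmap.reshape(n, -1)
    scores = flat.max(axis=1)               # peak response per image
    peaks = flat.argmax(axis=1)             # flat index of that peak
    order = np.argsort(-scores)[:k]         # strongest images first
    return [
        (int(i), float(scores[i]), divmod(int(peaks[i]), w))  # flat -> (row, col)
        for i in order
    ]


# Toy data: 10 images, 4 channels, 6x6 maps; image 7 is planted to
# excite channel 2 strongly at location (3, 4).
rng = np.random.default_rng(0)
acts = rng.random((10, 4, 6, 6)) * 0.1
acts[7, 2, 3, 4] = 5.0
print(top_activating_images(acts, channel=2, k=3)[0])  # → (7, 5.0, (3, 4))
```

The returned (row, col) is what gets traced back to an input patch; using the channel maximum is one common choice, and summing or averaging the map would rank images by overall rather than peak response.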

Consider six neurons in the pool5 layer and find the image patches that cause them to fire:

- One neuron fires for human faces.
- Another fires for dog faces.
- Another fires for flowers.
- Another fires for numbers.
- Another fires for houses.
- Another fires for shiny surfaces.
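Tracing a pool5 neuron back to the image patch it responds to, as in the examples above, comes down to receptive-field arithmetic. The sketch below assumes an AlexNet/CaffeNet-style stack of layers on a 227x227 input; the layer table `ALEXNET_TO_POOL5` and the helpers `receptive_field` and `input_patch` are hypothetical names, and the kernel/stride/padding values should be replaced with those of whichever network you actually use.

```python
from typing import List, Tuple

# (name, kernel_size, stride, padding) for every layer up to pool5.
# ASSUMPTION: an AlexNet/CaffeNet-style stack on a 227x227 input;
# replace these entries with the layers of your own network.
ALEXNET_TO_POOL5: List[Tuple[str, int, int, int]] = [
    ("conv1", 11, 4, 0),
    ("pool1", 3, 2, 0),
    ("conv2", 5, 1, 2),
    ("pool2", 3, 2, 0),
    ("conv3", 3, 1, 1),
    ("conv4", 3, 1, 1),
    ("conv5", 3, 1, 1),
    ("pool5", 3, 2, 0),
]


def receptive_field(layers: List[Tuple[str, int, int, int]]) -> Tuple[int, int, int]:
    """Return (size, jump, offset) for one unit of the last layer.

    size:   side length, in input pixels, of the patch one unit can see
    jump:   input-pixel distance between neighbouring units in a row or column
    offset: input coordinate of the first pixel seen by unit 0
            (negative values lie in the zero padding)
    """
    size, jump, offset = 1, 1, 0
    for _name, kernel, stride, padding in layers:
        size += (kernel - 1) * jump   # each layer widens the view by (k - 1) steps
        offset -= padding * jump      # padding shifts the window up/left
        jump *= stride                # strides compound multiplicatively
    return size, jump, offset


def input_patch(row: int, col: int, layers, image_size: int) -> Tuple[int, int, int, int]:
    """Map the unit at (row, col) of the last layer to the input patch it sees.

    Returns (top, left, bottom, right) with bottom/right exclusive,
    clipped to a square image of side image_size.
    """
    size, jump, offset = receptive_field(layers)
    top, left = row * jump + offset, col * jump + offset

    def clip(v: int) -> int:
        return max(0, min(v, image_size))

    return clip(top), clip(left), clip(top + size), clip(left + size)


print(receptive_field(ALEXNET_TO_POOL5))          # → (195, 32, -64)
print(input_patch(3, 4, ALEXNET_TO_POOL5, 227))   # → (32, 64, 227, 227)
```

Under this assumed configuration each pool5 unit sees a 195x195 region of the input, which is why the patches that excite pool5 neurons are large enough to contain whole faces or flowers.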

One of the most interesting conclusions has been that representations on some layers seem to be surprisingly local. Instead of finding distributed representations on all layers, we see, for example, detectors for faces, flowers, numbers, houses, and shiny surfaces in pool5.
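One simple way to probe whether a neuron behaves like a local detector is sketched below under stated assumptions: take the neuron's k strongest images and measure what fraction share a single category label. This "purity" heuristic and the function name `top_k_purity` are illustrative choices, not a method described in this post, and the sketch assumes labelled images and a per-image response for each neuron (for example, its peak activation) are available.

```python
import numpy as np


def top_k_purity(scores: np.ndarray, labels: np.ndarray, k: int = 10):
    """For each neuron, report how label-consistent its top-k images are.

    scores: (num_images, num_neurons) response of each neuron to each image
    labels: (num_images,) non-negative integer category of each image
    Returns a list of (dominant_label, purity) per neuron, where purity is
    the fraction of the neuron's k strongest images carrying that label.
    """
    results = []
    for j in range(scores.shape[1]):
        top = np.argsort(-scores[:, j])[:k]   # indices of the k strongest images
        counts = np.bincount(labels[top])     # label histogram of those images
        results.append((int(counts.argmax()), float(counts.max()) / k))
    return results


# Toy data: 30 images in 3 categories; neuron 0 is planted as a detector
# for category 2, while neuron 1 responds at random.
rng = np.random.default_rng(1)
labels = np.array([0, 1, 2] * 10)
scores = rng.random((30, 2))
scores[labels == 2, 0] += 10.0
print(top_k_purity(scores, labels, k=10)[0])  # → (2, 1.0)
```

A purity near 1.0 points to a detector-like neuron such as the face or flower units above, while a purity near 1/(number of categories) is more consistent with a distributed code; the score depends heavily on k and on how the images are labelled.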

Write to us at bd@agilytics.in to learn more about deep neural networks and recent advances.
